Executive Summary

Years after three horrific mass shootings, videos created by the attackers are still present on social media and mainstream streaming services. Some services are facing challenges detecting and getting rid of these videos, and one, Elon Musk’s X (formerly Twitter), doesn’t appear to be trying to remove them with any consistency or rigor. After Hatewatch reported the videos, which feature mass murder, X responded that they do not violate its terms of service, then abruptly removed most of them after receiving a request for comment on this story.

Perpetrators of massacres in Christchurch, New Zealand; Halle, Germany; and Buffalo, New York, filmed themselves as their crimes took place and simultaneously livestreamed the murders to social media sites for others to discover and view. In each case, viewers have downloaded their own copies of the videos and posted them onto other social media platforms and websites, spreading the videos far beyond their original online footprints.

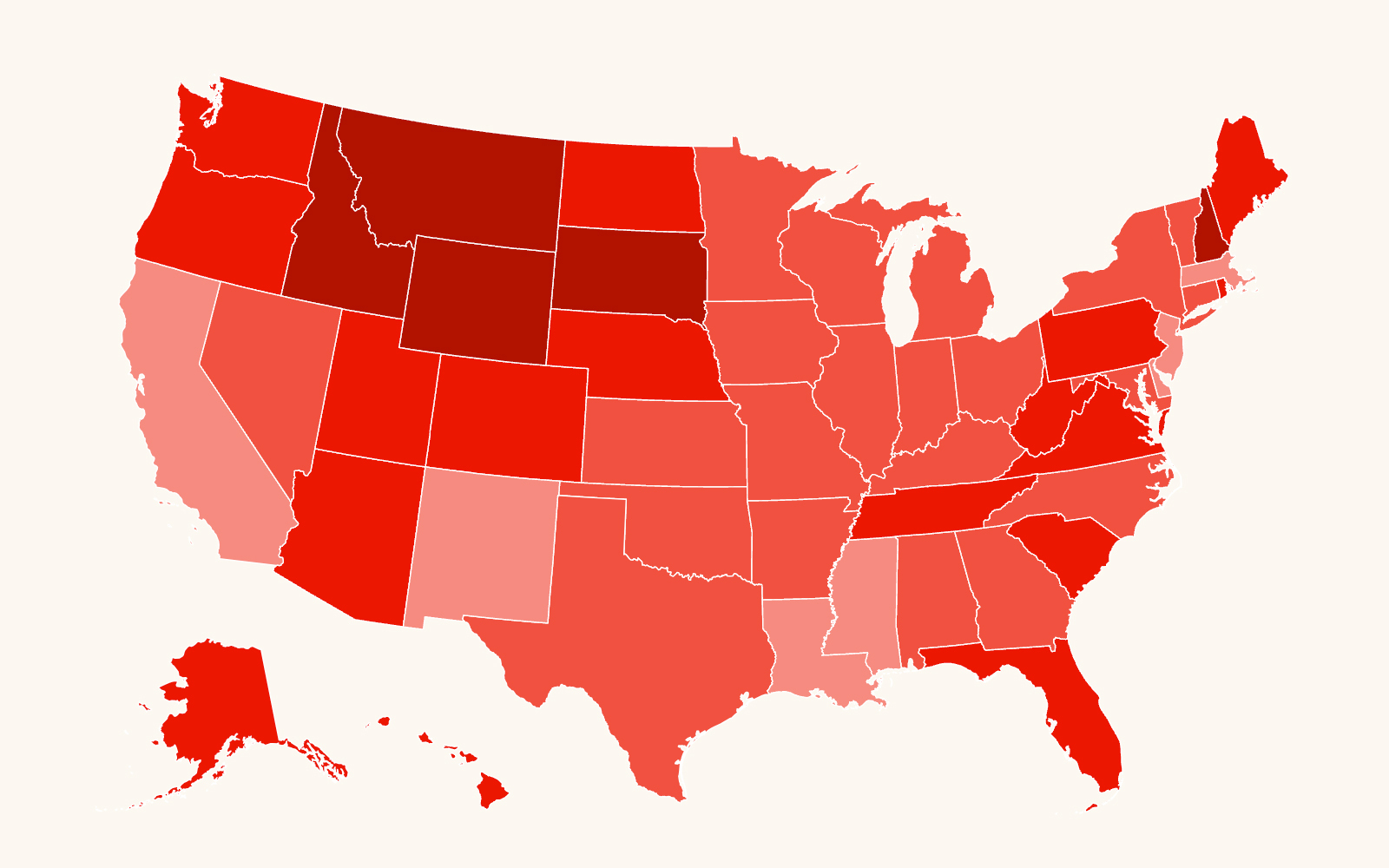

Using software from Pex, a content identification technology company that helps creators find audio and video content online, SPLC’s Data Lab tracked the spread of these videos across more than 20 platforms. We found at least 15,000 examples of mass shooter video artifacts from three incidents in a five-year period. This report details how users interact with the videos, such as editing them into video games or altering them as mashups or soundtracks to other content. Additionally, the video of the Christchurch, New Zealand, incident resulted in copycat behavior that carried over into multiple other incidents, some of which were also filmed, copied and spread on the internet.

The template: Christchurch

The livestream of two successive massacres at mosques in Christchurch, New Zealand, on March 15, 2019, was the first of its kind. The perpetrator, Brenton Tarrant, killed 51 people and injured 40 more during mass shooting attacks at the Al Noor mosque and the Linwood Islamic Centre, which he streamed on Facebook. During the video, the shooter made numerous references to various online subcultures, including invoking statements by popular YouTube personality PewDiePie, playing a soundtrack featuring songs that had been previously popularized on troll websites such as 4chan and 8chan, and showing off guns and accessories decorated with symbols and memes. Tarrant also released a written manifesto on 8chan filled with memes and subcultural references.

Facebook and other platforms responded by attempting to remove the video of the Christchurch attacks. But copies of the video spread across multiple other social media sites and video-streaming services. Using Pex’s Discovery tool, we found that more than 12,000 examples of the Christchurch video had been posted in the 24 hours following the incident.

The danger of ‘performance crime’

The Christchurch shooting is an example of so-called “performance crime,” in which the perpetrator records and disseminates the evidence of their crime as a means of self-promotion. The Christchurch video inspired subsequent shooters to commit similar crimes and to film themselves while doing so. This includes the Poway, California, attack by John T. Earnest on April 27, 2019. Earnest tried to livestream his activities but ultimately experienced technical issues that prevented this. He also posted a manifesto to 8chan, as Tarrant had done.

Other Christchurch-inspired incidents include the El Paso, Texas, attack by Patrick Crusius on Aug. 3, 2019, which included a manifesto posted to 8chan; the Baerum, Norway, attack by Philip Manshaus on Aug. 10, 2019, which was livestreamed to Facebook; the Halle, Germany, Yom Kippur attack by Stephan Balliet on Oct. 9, 2019, which was livestreamed to Twitch with an accompanying manifesto posted to Meguca, a website associated with 4chan; and the 2022 Buffalo, New York, attack, which was livestreamed to Twitch, was planned and announced on Discord, and included a decorated gun and a manifesto, also posted on Discord. Plentiful evidence from his own writings indicates that Payton Gendron, the perpetrator in the Buffalo attack, was inspired by both the beliefs and the methods of the Christchurch attacker.

How we studied the spread of these videos

We used software from Pex, a content identification technology company, to study the spread of these videos. Pex’s technology can find copies of a target video or audio asset across more than 20 social media platforms. It can detect matches in partial samples as short as one second, and in video and audio files that have been manipulated or distorted.

To serve as samples, we uploaded into Pex one copy of the Christchurch video, two different videos of the Halle attack, and one video from the Buffalo attack. All four of these video files also included audio recorded during the attacks.

The Christchurch video presented a unique technical challenge for this project because the shooter played music during much of the attack. The soundtrack consisted of five songs, some of which are freely available and in the public domain, and thus have a high rate of use in unrelated videos. This resulted in over 50,000 unrelated music matches that we had to remove from our analysis.

Another challenge for this project is that Pex does not cover the “alternative” or “free speech” platforms where this type of extremist content tends to persist unabated. Examples of such sites include trolling and bullying forums such as KiwiFarms, social apps such as Telegram that have little content moderation, and websites that traffic in gore or shocking content. However, the findings from this report do give important insights into how this harmful video content would be experienced on mainstream platforms, which claim to have higher standards for content moderation and are favored by advertisers. The size of mainstream platforms also makes them subject to additional regulations, such as the Digital Services Act in the European Union.

What we found

In all three cases – Christchurch, Halle and Buffalo – we found the highest number of videos being posted on the day of the attack or the day after the attack. In all three cases, the largest share of videos was posted to Twitter, followed by either Facebook or YouTube. Some of the videos were taken down in the direct aftermath of the incident, but as we compiled this report, we found dozens of cases of all three videos still available for viewing on multiple platforms, including X/Twitter, Facebook, Vimeo, Soundcloud, Reddit, VK, Streamable, Dailymotion and others.

Most of the videos posted in the days following each attack were direct copies of the originals. Some of these copies were shortened or had news broadcast-style chyrons or logos superimposed on them, but they did not differ substantially from the originals.

However, years after the attacks, some of the videos and audio samples we found had changed in notable ways. First, we found numerous cases of audio remixes in which the sounds of the attack were interleaved with music or sampled into other songs. Some of these new creations directly paid homage to the original attacks with titles like “Meme war at Christchurch” and “2:30pm in Buffalo.”

We also found videos that attempted to remix the originals into video games. Some of these overlaid the symbols of popular video games, for example the 1993 game Doom or the 2015 game Undertale, onto the original video. We also found videos that had been created as facsimiles of the original attacks, with the setting of the attack recreated inside building games like Roblox but overlaid with the original audio.

Finally, some of the videos that persisted well beyond the original news cycle were centered around “false flag” conspiracy theories purporting that the shootings were fake. Examples in this category include walkthroughs where a person critiques the original video, frame-by-frame, and explains their theories about why the video cannot possibly be real. These theories typically center around elaborate government conspiracies, or around alleged inconsistencies in the original videos, such as a supposed lack of expected noises, for example from shell casings hitting the ground.

The platforms respond

In the aftermath of the Christchurch attack, some social media platforms scrambled to remove copies of the video and manifesto. The Washington Post reported in the days after the attack that Facebook found and removed over 1.5 million copies of the video. But NBC News reported that six months after the attack – and after copycat shooters mounted additional attacks in Poway, California, and El Paso, Texas – Facebook still hosted dozens of copies of the video, some of which had been posted during the first week after the attack and were overlaid with content warnings.

Now, five years after the Christchurch and Halle attacks and two years after Buffalo, we easily found these videos on many new platforms, including some that didn’t even exist at the time of the shootings, and we also found dozens of copies of the videos on platforms like X (formerly Twitter). Many of these copies were completely unedited from the originals.

At several points during this study, we attempted to report samples of the Christchurch, Halle, and Buffalo videos to Soundcloud, Vimeo, X/Twitter, Dailymotion, Facebook, Reddit, Streamable, Likee and VK.

Because each platform has different criteria for what violates its own terms of service, the reporting standards vary widely across platforms, as do the procedures for reporting. Some platforms require the user to create an account and be logged in to report content. Some use an automated chat function to submit reports. Some use Google Forms to capture the report details. Some require the user to type descriptions of the content and why it violates policies. Others require screenshots, links to the location of the content on their platform, or timestamps for where in the video the violative content is located. When responding to reports, some platforms use email, some have a notification or “support inbox” inside the app itself, and some do not respond at all.

Results of our attempts at reporting were mixed. For example, early in this study, we reported six copies of the Christchurch video to Vimeo, and all six were removed within a day. However, when we continued collecting additional data a few months later, we found that 10 more copies of the video had been posted to Vimeo, including two in the “video game” format and two of the conspiracy type. We reported these additional 10 copies, and Vimeo removed all of them within a few days. We had similarly positive results with our reports to Soundcloud and the Russian social media site VK.

On X, the situation was quite different. Of the 12,246 copies of the Christchurch video found by Pex on the day of the incident, 5,251 (43%) were posted to X/Twitter. In the Halle and Buffalo cases, the shares were even higher: 55% of the Halle videos and 61% of the Buffalo videos were posted to X/Twitter in the first five days after the attack.

As we collected data for this report, we found numerous copies of the videos still on X. We reported a sample of 13 of these videos, and after 17 days, X responded by separate emails to each of our reports, stating in each case that the Christchurch, Halle or Buffalo shooting video “hasn’t broken our rules” and that it would therefore not be removed. After Hatewatch reached out for comment on this story, 12 of the videos were removed.

The Guardian reported in November 2022 that X removed copies of the Christchurch videos only after being prompted to do so by the New Zealand government. Earlier that month, thousands of personnel, including members of the trust and safety teams, were laid off from Twitter after Elon Musk purchased the company the month prior. By December, Musk had dissolved Twitter’s Trust and Safety Council entirely.

Recommendations for limiting the spread of mass shooter videos

Because self-produced mass shooter videos serve as inspiration for subsequent incidents, these videos are inherently dangerous and must not be allowed to spread across the internet. Both mainstream and alternative platforms must commit to limiting the spread of these videos. The data collected for this project shows that platforms can and should use video- and audio-matching technology to find and remove mass shooter videos from their platforms, even after the initial news cycle is over. It is also important for the platforms to use proactive methods of detecting the video and audio samples at the time they are uploaded rather than just relying on user reports.

The European Union implemented the Digital Services Act in 2023 to crack down on large platforms, such as X, that host this type of content. Under this law, platforms can be fined up to 6% of their annual global revenue for non-compliance. X/Twitter failed a “stress test” of its systems conducted by the EU in 2023 to determine if the platform was prepared to be governed by the new law.

One potential solution is to add the signatures of these shooter videos to a shared detection system, such as the hash-sharing database run by the Global Internet Forum to Counter Terrorism (GIFCT), that platforms use to proactively find and remove other types of terrorist content. GIFCT announced in 2022 that it had done this with these shooting incidents, and reported its findings in an annual transparency report. Nonetheless, it is clear from our study that some platforms, like X, either have not adopted the GIFCT technology or have not implemented it correctly, since these terror videos are still available on the platform.

Alternative platforms and niche websites such as KiwiFarms and 4chan are also part of the problem. These sites are where the shooters often choose to post their livestream links, along with links to their videos and manifestos. People who want to spread the videos also upload them to these no-moderation platforms, knowing they are much less likely to be taken down. The New York Attorney General’s office issued a report after the Buffalo shooting suggesting that lower-level infrastructure providers, such as the web-hosting companies and domain registrars that provide critical services to the platforms, deny these rogue platforms use of their services. Prior research has also shown that this infrastructure-level removal strategy can make it much more difficult for hateful and violent content to persist unabated.

Creede Newton contributed to this report.

Editor’s Note: This story has been updated to clarify the removal of unrelated songs during the data cleaning process.

Photo illustration by SPLC